This blog is a preview of our Malign Interference and Crypto Report. Discover the role crypto plays in facilitating disinformation campaigns worldwide, and how analysts can use crypto transaction tracing to expose and disrupt malign interference efforts.

Malign Interference and Crypto

How crypto transaction tracing can expose and disrupt malign influence efforts

Does the disinformation landscape have a crypto nexus?

In the last several years, malign foreign state and non-state actors have conducted systematic influence campaigns to sow chaos, division, and confusion and to interfere with democratic institutions and processes. Historically, these activities have been most impactful in the run-up to elections, and 2024 is shaping up to be the largest global election year in history, with countries home to nearly half the world's population holding national elections.

Although the spread of disinformation is not new, government agencies have observed novel tactics being employed to facilitate these campaigns. The 2019 Mueller Report famously exposed Russian government interference in the 2016 U.S. presidential election cycle, which included the theft and publication of sensitive documents intended to influence the election's outcome. A less widely known finding from the report was that the technology and infrastructure used to hack the Hillary Rodham Clinton campaign, the Democratic National Committee, and the Democratic Congressional Campaign Committee were purchased with nearly $100K in bitcoin.

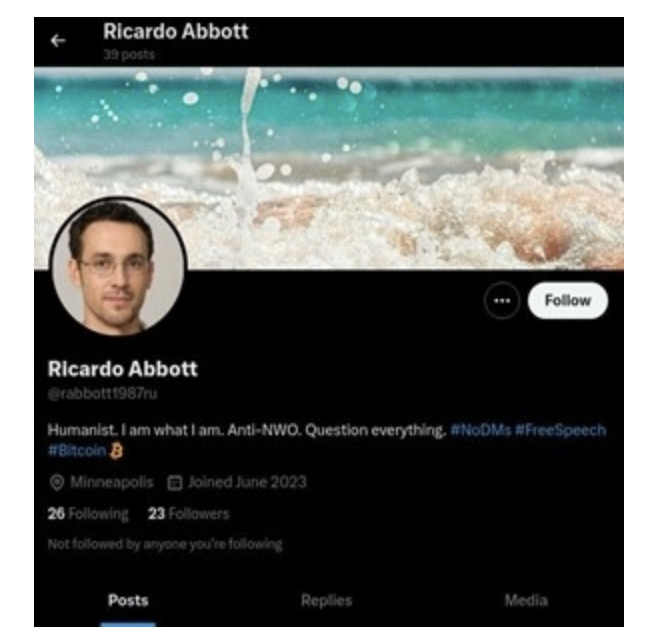

Government officials have since uncovered similar disinformation campaigns with a crypto dimension in the lead-up to the 2020 election, and others have unfolded in the years since. Most recently, the U.S. Department of Justice announced the disruption of a covert, Russian government-operated social media bot farm that used AI tools to create fictitious social media profiles (often purporting to belong to individuals in the United States), which the operators then used to promote disinformation and messages supporting Russian government objectives. Many of the 968 Kremlin-run X accounts the bot farm leveraged featured crypto-related messages in their profiles.

The bot farm obtained control of two domains, which it then used to create email accounts that allowed it to register the fictitious accounts on X. According to records obtained by the FBI and detailed in the case's supporting affidavit, the actor used bitcoin to purchase these domains from Namecheap, a U.S.-based domain name registrar and web hosting company.

Additionally, the U.S. Treasury Department's Office of Foreign Assets Control (OFAC) has sanctioned many individuals and entities for their part in malign interference, listing affiliated crypto addresses as identifiers in its designations. Below are some examples.

OFAC’s sanctions related to disinformation, with a crypto nexus

| Date | Designation | Details |
|---|---|---|
| September 2020 | Russian nationals supporting the Internet Research Agency (IRA) | Following on its 2018 designation of IRA for 2016 election interference, OFAC sanctioned three IRA employees (Artem Lifshits, Anton Andreyev, and Darya Aslanova) who supported the organization's crypto accounts used to fund activities tied to malign influence operations worldwide. Several crypto addresses were included in the designation. The same month, the DOJ filed a criminal complaint against Lifshits, alleging he was a manager for Project Lakhta, a Russia-based group known for engaging in election interference, and took part in a conspiracy to use stolen identities of U.S. persons to open fraudulent accounts at banks and cryptocurrency exchanges in the victims' names. |
| April 2021 | SouthFront and other media outlets facilitating disinformation | OFAC sanctioned SouthFront, a disinformation website registered in Russia that receives direction from the Federal Security Service (FSB), a Russian intelligence agency. The designation included three affiliated crypto addresses that SouthFront used to solicit donations. |
| September 2022 | Facilitators of Russia's aggression in Ukraine | Among other groups, OFAC sanctioned Task Force Rusich, a neo-Nazi paramilitary group that participated in combat alongside Russia's military in Ukraine. Task Force Rusich solicited crypto donations via social media channels and used them to fund military purchases and the spread of disinformation. OFAC included five affiliated crypto addresses in the designation. |
| March 2024 | Actors supporting Kremlin-directed malign influence efforts | Russian nationals Ilya Andreevich Gambashidze and Nikolai Aleksandrovich Tupikin, along with their companies, assisted the Russian government in foreign malign influence campaigns, including efforts to deceive voters around the world and undermine trust in their governments. OFAC included two crypto addresses in the designation for Gambashidze. |

Disinformation is a global challenge that requires international coordination to disrupt, and the European Commission recently launched a campaign to boost awareness of the risks it poses. According to the Commission, 68% of European citizens “agree that they often encounter news or information they believe misrepresent reality or are false.” Adding complexity, artificial intelligence (AI) has become increasingly sophisticated and accessible, making high-quality deepfake videos ever easier to generate. Experts no longer question whether AI will affect elections worldwide, only how damaging its effects might be.

It's also worth noting that disinformation campaigns are not confined to the transatlantic community. Russian-language influence operations are notably prevalent in Latin America, while Chinese influence operations are prominent in the Asia-Pacific region. To meet these challenges, analysts and policymakers worldwide need to understand not only the messaging being used but also the technologies and tools that enable these campaigns in the first place.

That's where cryptocurrency transaction tracing can help. Much like fiat currency, crypto is used to finance disinformation campaigns and pay for infrastructure. Unlike most fiat payments, however, crypto transactions are recorded on public blockchains, so the flow of funds between addresses can be traced, connecting people, places, and things. Using crypto tracing technology, investigators can link transactions to groups and individuals and discover who's behind these campaigns, as well as who's supporting them.
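To make the tracing idea concrete, here is a minimal Python sketch, assuming a simplified model in which every transaction is a single sender-to-receiver transfer. The addresses, amounts, and the `build_graph` and `trace` helpers are hypothetical and invented purely for illustration; this is not how any particular analytics product works, and real bitcoin tracing also has to handle multi-input, multi-output transactions and address-clustering heuristics. Starting from one flagged address, a breadth-first search follows funds forward (who received money) and backward (who sent money) to surface the wallets within a few hops.

```python
from collections import deque

# Hypothetical toy ledger: each tuple is (sender, receiver, amount_btc).
# These addresses and amounts are invented for illustration only; real data
# would come from a blockchain node or an analytics platform.
TRANSACTIONS = [
    ("addr_donor_1", "addr_flagged", 0.5),
    ("addr_donor_2", "addr_flagged", 1.2),
    ("addr_flagged", "addr_intermediary", 1.6),
    ("addr_intermediary", "addr_registrar", 0.3),
    ("addr_intermediary", "addr_exchange", 1.3),
    ("addr_unrelated", "addr_exchange", 4.0),
]


def build_graph(transactions):
    """Index outgoing and incoming edges so funds can be followed both ways."""
    outgoing, incoming = {}, {}
    for sender, receiver, amount in transactions:
        outgoing.setdefault(sender, []).append((receiver, amount))
        incoming.setdefault(receiver, []).append((sender, amount))
    return outgoing, incoming


def trace(seed, transactions, max_hops=2):
    """Breadth-first search from a seed address, following funds forward and
    backward up to max_hops. Returns {address: hop distance from seed}."""
    outgoing, incoming = build_graph(transactions)
    distances = {seed: 0}
    queue = deque([seed])
    while queue:
        address = queue.popleft()
        if distances[address] == max_hops:
            continue  # Stop expanding once the hop limit is reached.
        neighbors = [receiver for receiver, _ in outgoing.get(address, [])]
        neighbors += [sender for sender, _ in incoming.get(address, [])]
        for neighbor in neighbors:
            if neighbor not in distances:
                distances[neighbor] = distances[address] + 1
                queue.append(neighbor)
    return distances


if __name__ == "__main__":
    reached = trace("addr_flagged", TRANSACTIONS)
    for address, hops in sorted(reached.items(), key=lambda kv: (kv[1], kv[0])):
        print(hops, address)
```

Run as-is, the sketch reaches the two donor wallets and the intermediary at one hop, and the registrar and exchange addresses at two hops. Raising `max_hops` to 3 also pulls in `addr_unrelated`, whose only connection is sending funds to the same exchange address; this illustrates why a hop limit matters and why large services such as exchanges need careful handling rather than being treated as direct links between users.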

Technology plays an undeniable role in the spread of disinformation, and nefarious actors couldn't execute these campaigns without specific infrastructure and services. Many of those services are illicit or underground, but some are legitimate, which means bad actors using them may not attract scrutiny.

What on-chain activity reveals about malign interference

As the United States faces a critical presidential election, vigilance is key to understanding and disrupting the inevitable onslaught of disinformation. The German Marshall Fund (GMF) is a nonpartisan American public policy think tank championing democratic values, and its Alliance for Securing Democracy provides innovative tools, methodologies, and expert analysis in support of that mission.

“Global information operations are facilitated by a disaggregated network of service providers, online influencers, and digital mercenaries, many of whom rely on emerging technologies to fund or profit from their illicit activities,” says Bret Schafer, Senior Fellow, Media and Digital Disinformation at Alliance for Securing Democracy. “Understanding the nexus of malign finance and malign influence is therefore critical to disrupting disinformation campaigns, especially in an era when emerging technology has made it easier and cheaper for both state and non-state actors to undermine the integrity of the online information space.”

Investigative resources for analysts and policymakers

Here are some tools that GMF’s Alliance for Securing Democracy has developed to aid a broader understanding of and investigations into disinformation:

- Authoritarian Interference Tracker: Captures the Russian and Chinese governments’ attempts to undermine democracy in over 40 transatlantic countries since 2000, with a focus on information manipulation, cyber operations, malign finance, civil society subversion, and economic coercion.

- Hamilton 2.0 Dashboard: Provides a summary analysis of narratives and topics that Russian, Chinese, and Iranian government officials and state-backed media promote on Telegram, YouTube, Facebook, Instagram, state-sponsored news websites, and the official press releases and transcripts that each government's ministry of foreign affairs publishes. Offers a social data search tool for conducting keyword searches across articles, social media platforms, and archived tweets.

- The Information Laundromat: Jointly created by GMF’s Alliance for Securing Democracy, the University of Amsterdam (UvA), and the Institute for Strategic Dialogue (ISD), the Information Laundromat is an open-source tool for uncovering content and metadata similarities between and among websites.

The following organizations also offer useful research and tools for gaining a deeper understanding of dis- and misinformation campaigns:

- Bellingcat is a group of investigators, researchers, and citizen journalists who share a passion for open source research. It shares its latest news on a variety of global topics including disinformation, and offers guides that others can use to inform their own investigations.

- DFRLab is an organization at the Atlantic Council with technical and policy expertise on disinformation and related topics. It produces open source intelligence (OSINT) research on disinformation, online harms, foreign interference, and more, and shares its latest research on those topics.

- Graphika is a SaaS company that “creates large-scale explorable maps of social media landscapes and detects critical narratives circulating throughout their communities” and shares its latest research and investigative findings.

As these resources make clear, tracking influence operations requires ongoing monitoring. In this report, we'll examine:

- Actors and groups who’ve spread disinformation

- The services and tools they’ve used to do it

- The role that crypto has played

- What these trends could signal for future disinformation campaigns

- How public sector agencies can use crypto transaction tracing to disrupt disinformation networks

Download your copy today.